This has been the week of JavaScript for me, and it came together in my first few attempts to create an MVC client-side application using the Backbone.js library.

Defining the Models

I wrote a post about the MVC architecture; if you aren’t familiar with the basic concepts, go read it. I’ll be right here! Assuming you’re up to speed, my task was to decide how to represent the data our app works with as models.

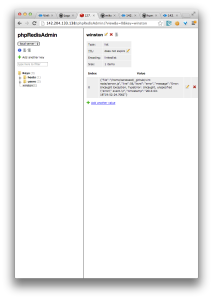

Our application uses data about two things, broadly speaking:

- Machines hosting libvirt

- The VMs being managed by each libvirt host

In light of this, my first thought was to create a model for each:

var Host = Backbone.Model.extend({
  // ...
});

// &&

var Instance = Backbone.Model.extend({
  // ...
});

Then all I would have to do is create a collection (like an array) of Instance models and associate it with the Host model representing the physical machine that manages those VMs. But how to associate the two? A programmatic relationship between each collection of Instance models and the matching Host model would clearly be beneficial, but Backbone.js itself limited my options.

Backbone.js does not natively support relationships between models (like Instance and Host), or between a model and a collection of a different model type (like a Host model and a collection of Instance models). I did find a library that adds this (backbone.relational), but we have deadlines to hit and I couldn’t afford to spend another half a day learning yet another library.

I pseudocoded the Instance and Host models to duplicate the information that binds them conceptually: the IP of the host machine. As you read it, keep in mind that the extend() method of Backbone.Model accepts an object literal (a series of key/value pairs, whose values can be functions) that defines the features of the model being created:

var Host = Backbone.Model.extend({
  initialize: function () {
    // Model attributes are read and written through get/set
    if (!this.get("ip")) { this.set("ip", 0); } // Safe state
  }
});

// &&

var Instance = Backbone.Model.extend({
  initialize: function () {
    if (!this.get("hostIp")) { this.set("hostIp", 0); } // Safe state
  }
});

On reflection, though, I saw that this approach would effectively neuter Backbone.js: isn’t the point of a framework to keep me from having to manage relationships like this by hand?
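To make that bookkeeping concrete, here is a minimal sketch in plain JavaScript. Plain objects stand in for the Host and Instance models so it runs without Backbone.js, and the IPs and VM names are invented. Every lookup re-scans all instances for a matching copy of the IP, and nothing keeps the duplicated key in sync.

```javascript
// Hypothetical plain objects standing in for Host and Instance models.
var hosts = [{ ip: "10.0.0.4" }, { ip: "10.0.0.5" }];
var instances = [
  { name: "web1", hostIp: "10.0.0.4" },
  { name: "db1", hostIp: "10.0.0.5" },
  { name: "web2", hostIp: "10.0.0.4" }
];

// Every lookup re-scans the full instance list for a matching copy of the IP.
function instancesFor(host, allInstances) {
  return allInstances.filter(function (vm) {
    return vm.hostIp === host.ip;
  });
}

var namesOnFirstHost = instancesFor(hosts[0], instances).map(function (vm) {
  return vm.name;
}); // ["web1", "web2"]

// Nothing keeps the duplicated key in sync: re-address the host and its
// VMs silently become orphans.
hosts[0].ip = "10.0.0.9";
var countAfterMove = instancesFor(hosts[0], instances).length; // 0
```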

So I pseudocoded another possibility, where each Host model object contains a key for each VM on the machine it represents:

var Host = Backbone.Model.extend({
  initialize: function () {
    if (!this.get("ip")) { this.set("ip", 0); } // Safe state
    var that = this; // the AJAX callback needs a reference to this model
    this.getInstances = function () {
      // AJAX API call to our interface-server to return VM data for the host's IP
      $.ajax({
        url: "/list/vms/" + that.get("ip"),
        dataType: "json",
        success: function (result) {
          // A fictional method that would parse VM data from the AJAX call
          // and create keys within the model for each VM detailed
          that.setInstanceData(result);
        }
      });
    };
    this.getInstances(); // Invoke immediately after creating the method
  }
});

This option appeared to be the lesser of the two evils. Strictly speaking, it also undercuts Backbone.js by ignoring an obvious candidate for a model (virtual machines), but it spares the developer from tracking which Instance collection is paired with which Host model when there is no relationship library to do it.

In the end, we decided that I was right to forgo learning another library, and that my second approach would be the more reusable of the two.
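Since setInstanceData is only a fictional method in the pseudocode, here is one hypothetical shape it could take. The VM record format and the "vm_" key prefix are assumptions for illustration, not our real API, and a plain object stands in for the model’s attributes; inside a real Backbone.js model the loop would call this.set(...) instead.

```javascript
// Hypothetical sketch: the VM record fields and the "vm_" prefix are assumptions.
function setInstanceData(attributes, vmList) {
  vmList.forEach(function (vm) {
    // One key per VM, so the host "contains" its instances
    attributes["vm_" + vm.name] = { state: vm.state, memoryMB: vm.memoryMB };
  });
  return attributes;
}

var hostAttributes = { ip: "10.0.0.4" };
setInstanceData(hostAttributes, [
  { name: "web1", state: "running", memoryMB: 512 },
  { name: "db1", state: "shutoff", memoryMB: 1024 }
]);
// hostAttributes now has the keys ip, vm_web1 and vm_db1
```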

Host Model Implementation

Backbone.js gives each model a constructor that accepts key/value pairs and stores them as the new model’s attributes. After setting those attributes, the constructor calls the model’s initialize method, if one was defined (as in my examples above), and runs that logic.

For the Host model, the initialize method needed to do three things:

- Ensure an IP was passed, and set a safe state if not

- Pull vital information about the host machine (CPU/memory usage, etc.) and assign it as keys

- Pull information on all instances managed by libvirt on that host, and assign them as keys on the model representing that host
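The constructor-then-initialize order and the three steps above can be sketched in plain JavaScript. MiniModel is a simplified, hypothetical stand-in for Backbone.Model (not its real implementation), and fakeServer is an invented replacement for the AJAX calls; real AJAX is asynchronous, while this fake answers immediately to keep the sketch short.

```javascript
// Hypothetical stand-in for Backbone.Model: store attributes, then initialize().
function MiniModel(attrs) {
  this.attributes = Object.assign({}, attrs);
  this.initialize();
}
MiniModel.prototype.get = function (key) { return this.attributes[key]; };
MiniModel.prototype.set = function (key, value) { this.attributes[key] = value; };
MiniModel.prototype.initialize = function () {}; // default no-op

// Invented data source; in the app these would be AJAX calls.
var fakeServer = {
  hostStats: function (ip) { return { cpu: 12, memory: 48 }; },
  listVms: function (ip) { return [{ name: "web1" }]; }
};

function Host(attrs) { MiniModel.call(this, attrs); }
Host.prototype = Object.create(MiniModel.prototype);
Host.prototype.constructor = Host;
Host.prototype.initialize = function () {
  // 1. Ensure an IP was passed, and set a safe state if not
  if (!this.get("ip")) { this.set("ip", 0); }
  // 2. Pull vital information about the host machine
  var stats = fakeServer.hostStats(this.get("ip"));
  this.set("cpu", stats.cpu);
  this.set("memory", stats.memory);
  // 3. Pull the instances on that host, one key per VM
  var that = this;
  fakeServer.listVms(this.get("ip")).forEach(function (vm) {
    that.set("vm_" + vm.name, vm);
  });
};

var host = new Host({ ip: "10.0.0.4" });
var unnamed = new Host({}); // no IP passed, so ip falls back to 0
```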

AJAX & jQuery

At this point, setting a safe-state IP address was a cakewalk, as it should be. The other two tasks required me to learn how to use jQuery’s AJAX method.

Backbone.js relies on jQuery (or a compatible library) for its AJAX and DOM features, and in our app jQuery is loaded as the global $ object. To leverage it, I read the documentation for jQuery’s ajax method and created the following pseudostructure for my AJAX calls:

$.ajax({
  url: "apiCallGoesHere",
  dataType: "json", // The datatype we expect back (note the capital T)
  cache: false,     // Appends a timestamp so the browser never serves a cached reply
  success: function (data) { /* success-case logic goes here */ },
  error: function (jqXHR, textStatus) {
    // jQuery passes the status string as the SECOND argument
    switch (textStatus) {
      case null:
      case "timeout":
      case "error":
      case "abort":
      case "parsererror":
      default:
        // Error logic will populate these cases
        console.log("XX On: " + this.url + " XX");
        console.log("XX Error, connection to interface-server refused XX");
        break;
    } // END SWITCH
  } // END ERROR LOGIC
}); // END AJAX CALL
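As a sketch of how those empty cases might eventually be populated: jQuery’s documentation lists null, "timeout", "error", "abort" and "parsererror" as possible textStatus values. The function name describeAjaxError and the message wording below are hypothetical, chosen only for illustration.

```javascript
// Hypothetical helper: turn jQuery's textStatus into a readable log line.
function describeAjaxError(textStatus, url) {
  var reason;
  switch (textStatus) {
    case "timeout":
      reason = "interface-server did not answer in time";
      break;
    case "abort":
      reason = "request was aborted";
      break;
    case "parsererror":
      reason = "response was not valid JSON"; // we asked for dataType "json"
      break;
    case "error":
      reason = "HTTP error or network failure";
      break;
    default: // includes null
      reason = "unknown error";
      break;
  }
  return "XX On: " + url + " | " + reason + " XX";
}

var line = describeAjaxError("parsererror", "/list/daemons/");
// "XX On: /list/daemons/ | response was not valid JSON XX"
```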

With my now-intermediate understanding of JavaScript, I bumbled my way through a test version of the model and ran into a few challenges:

The context of “this”

In JavaScript, the value of this depends on how a function is called, not where it is defined, so a nested function (such as an AJAX callback) does not see the this of the function that contains it. This is widely considered a design flaw in the language, and it made my code do some funny things until I tracked it down as the root of the problem. The solution was to define a that variable holding a reference to the outer function’s this (here, the model object), e.g.

var that = this;

…which is visible to every nested function as an alias for the original value of this.
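A minimal, runnable sketch of the problem and the fix. Host here is a plain constructor and fakeAjax is a hypothetical stand-in for $.ajax: like jQuery, it does not call our callback as a method of the model. ES5’s Function.prototype.bind is a common alternative to that.

```javascript
// Hypothetical stand-in for $.ajax: the callback is called as a plain function.
function fakeAjax(onSuccess) {
  onSuccess({ vms: ["web1", "web2"] });
}

function Host(ip) {
  this.ip = ip;
  this.instances = [];
}

// Broken version: inside the callback, `this` is not the Host.
Host.prototype.loadBroken = function () {
  var host = this; // kept only so we can check what the callback sees
  var callbackSawHost;
  fakeAjax(function (result) {
    callbackSawHost = (this === host);
  });
  return callbackSawHost; // false
};

// Fixed version: capture the Host in `that` before entering the callback.
Host.prototype.loadInstances = function () {
  var that = this;
  fakeAjax(function (result) {
    that.instances = result.vms;
  });
};

var h = new Host("10.0.0.4");
var sawHost = h.loadBroken(); // false
h.loadInstances();            // h.instances is now ["web1", "web2"]
```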

Get/Set methods

Backbone.js model objects aren’t just the key/value pairs the user defines: they inherit from Backbone.Model, which supplies the constructor logic and more. Trying to define and retrieve attributes directly as object properties failed badly, because the user-defined keys are actually stored in a plain object under a property called attributes:

var host1 = new Host({ ip: "0.0.0.0" }); // Create new model (attributes are passed as an object)
host1.ip = "2.3.4.5"; // "works", but only adds a plain property to the object, because...
alert( host1.attributes.ip ); // 0.0.0.0
alert( host1.ip ); // 2.3.4.5

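To take advantage of Backbone.js features such as user-defined validation (in Backbone.js 0.9.10 and later, set runs the model’s validate method when passed { validate: true }), you have to use the provided get/set methods. They also make for the most secure, cohesive implementation of a Backbone.js powered app: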

var host1 = new Host({ ip: "0.0.0.0" }); // Create new model
host1.set("ip", "2.3.4.5"); // BINGO
alert( host1.get("ip") ); // 2.3.4.5

Another benefit of going through set is that it fires a “change” event (along with an attribute-specific event such as “change:ip”), which can be used to trigger actions like re-rendering the page to show the new information.
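To show why direct assignment misses both the attributes and the events, here is a simplified, hypothetical stand-in for a model written in plain JavaScript. It is not Backbone.js’s implementation, only an imitation of the behaviour described above: attributes live in this.attributes, and set fires “change:ip” and then “change”.

```javascript
// Hypothetical stand-in for a Backbone-style model with change events.
function MiniModel(attrs) {
  this.attributes = Object.assign({}, attrs);
  this._handlers = {};
}
MiniModel.prototype.on = function (eventName, handler) {
  (this._handlers[eventName] = this._handlers[eventName] || []).push(handler);
};
MiniModel.prototype.trigger = function (eventName) {
  var args = Array.prototype.slice.call(arguments, 1);
  (this._handlers[eventName] || []).forEach(function (h) { h.apply(null, args); });
};
MiniModel.prototype.get = function (key) { return this.attributes[key]; };
MiniModel.prototype.set = function (key, value) {
  if (this.attributes[key] === value) { return this; } // no change, no event
  this.attributes[key] = value;
  this.trigger("change:" + key, this, value); // attribute-specific event first
  this.trigger("change", this);               // then the general event
  return this;
};

var host1 = new MiniModel({ ip: "0.0.0.0" });
var log = [];
host1.on("change:ip", function (model, value) { log.push("change:ip " + value); });
host1.on("change", function () { log.push("change"); });

host1.ip = "9.9.9.9";       // plain property: attributes untouched, no events
host1.set("ip", "2.3.4.5"); // attribute updated, both events fire
```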

Redundant code

I repeated the same basic AJAX call structure at least four times before I factored it out into a helper object called API. This way, every component of our Backbone.js app could make a server call in a standard way, without duplicate code:

var API = {
  // AJAX wrapper
  callServer: function (call, success, error) {
    $.ajax({
      url: "/" + call,
      dataType: "json",
      cache: false,
      success: success,
      error: function (jqXHR, textStatus) {
        // INTERFACE-SERVER ERROR HANDLING
        switch (textStatus) {
          case null:
          case "timeout":
          case "error":
          case "abort":
          case "parsererror":
          default:
            console.log("XX On: " + this.url + " XX");
            console.log("XX Error, connection to interface-server refused XX");
            // Hand the status to the caller's error callback, if one was given
            if (typeof error === "function") { error(textStatus); }
            break;
        } // END-Switch
      } // END-Error
    }); // End ajax call
  } // END callServer function
}; // END API object

Now it was as easy as:

API.callServer("apiCallPath", function (data) { /* success callback logic */ }, function (textStatus) { /* error callback logic */ });
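To exercise the API wrapper above outside a browser, this sketch swaps jQuery for a tiny hypothetical fake of $.ajax with an invented routing table. Real $.ajax is asynchronous; the fake calls back immediately. The wrapper keeps the same shape: one place that knows the URL prefix, the dataType and the error logging.

```javascript
// Hypothetical fake of the slice of jQuery the wrapper uses (not real jQuery).
var $ = {
  routes: { "/list/daemons/": ["10.0.0.4"] }, // canned responses (invented)
  ajax: function (settings) {
    if (Object.prototype.hasOwnProperty.call(this.routes, settings.url)) {
      settings.success(this.routes[settings.url]);
    } else {
      // Like jQuery, call error with the request settings as `this`
      settings.error.call(settings, null, "error", "Not Found");
    }
  }
};

// The wrapper: one place that knows how to talk to the interface-server.
var API = {
  callServer: function (call, success, error) {
    $.ajax({
      url: "/" + call,
      dataType: "json",
      cache: false,
      success: success,
      error: function (jqXHR, textStatus) {
        console.log("XX On: " + this.url + " XX");
        if (typeof error === "function") { error(textStatus); }
      }
    });
  }
};

var received = null;
var failure = null;
API.callServer("list/daemons/", function (data) { received = data; });
API.callServer("list/nowhere/", function () {}, function (status) { failure = status; });
// received is ["10.0.0.4"]; failure is "error"
```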

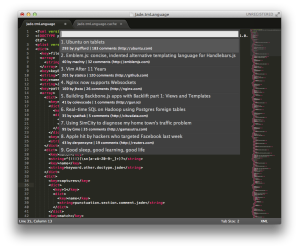

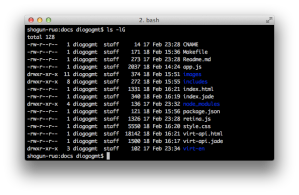

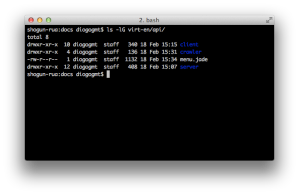

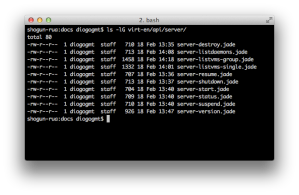

Defining a collection

The next challenge was to define a collection for Host model objects. To oversimplify, a collection object is an array of a specified type of model object, along with methods for manipulating that array. In this case, we needed it to make an API call to find the IPs of all hosts running libvirt, and then create a Host model for each one.

The logic was very simple, since collection objects support an initialize method just as model objects do.
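In Backbone.js, a collection declares its model type (model: Host), and its add method turns plain attribute hashes into Host models. The sketch below imitates that flow with hypothetical plain-JavaScript stand-ins so it runs on its own. fetchDaemonIps is an invented replacement for the AJAX call to /list/daemons/, and it answers immediately instead of asynchronously.

```javascript
// Hypothetical stand-ins (not Backbone code): a tiny Host model and a tiny
// collection whose initialize() asks the server for daemon IPs.
function Host(attrs) {
  this.attributes = Object.assign({ ip: 0 }, attrs); // ip 0 = safe state
}
Host.prototype.get = function (key) { return this.attributes[key]; };

// Invented replacement for the AJAX call to /list/daemons/.
function fetchDaemonIps(onSuccess) {
  onSuccess(["10.0.0.4", "10.0.0.5"]);
}

function HostList() {
  this.models = [];
  this.initialize(); // called by the constructor, as Backbone does
}
HostList.prototype.add = function (attrs) {
  var model = new Host(attrs); // attribute hash -> Host model
  this.models.push(model);
  return model;
};
HostList.prototype.initialize = function () {
  var that = this; // the callback needs the collection, not its own `this`
  fetchDaemonIps(function (ips) {
    ips.forEach(function (ip) { that.add({ ip: ip }); });
  });
};

var hostList = new HostList();
// hostList.models holds two Host models, for 10.0.0.4 and 10.0.0.5
```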

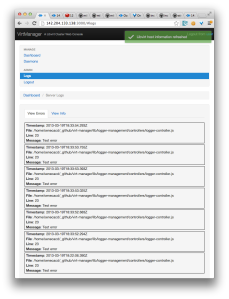

Making it run

By strategically adding console.log() calls, we were able to watch the app run – headfirst into a wall. Diogo wrote a post about that particular issue, and as we resolved it, we reflected on how inelegantly our Backbone.js app handled the error.

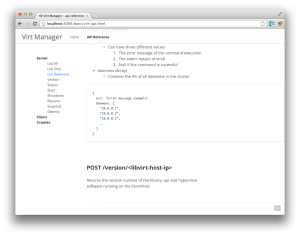

In response, we standardized the format of our API into a JSON object with the following attributes:

{
  err: "errorCode",
  data: {
    dataKey: value,
    ... : ...,
    ...
  }
}

…which simplified the implementation of error handling within our application.
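A minimal sketch of client-side handling for that envelope. The function name handleEnvelope is hypothetical, treating an empty or missing err as success is an assumption, and the error code "noDaemons" is invented for the example.

```javascript
// Sketch: unwrap a response in the { err, data } envelope.
// Assumption: a missing or empty err means success.
function handleEnvelope(response, onData, onError) {
  if (response === null || typeof response !== "object") {
    onError("malformed response"); // e.g. an HTML error page instead of JSON
  } else if (response.err) {
    onError(response.err);
  } else {
    onData(response.data);
  }
}

var dataSeen = [];
var errorsSeen = [];
function onData(d) { dataSeen.push(d); }
function onError(e) { errorsSeen.push(e); }

// Hypothetical responses
handleEnvelope({ err: "", data: { ips: ["10.0.0.4"] } }, onData, onError);
handleEnvelope({ err: "noDaemons", data: {} }, onData, onError);
handleEnvelope("<html>404</html>", onData, onError);
```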

Our next try still didn’t work, but it showed us how gracefully our new error handling dealt with the failure.

Our console log:

[creating hosts] VNMapp.js:200
-begin collection calls VNMapp.js:182
Failed to load resource: the server responded with a status of 404 (Not Found) http://192.168.100.2/list/daemons/?_=1360940232244
XX On: /list/daemons/?_=1360940232244 XX VNMapp.js:23
XX Error, connection to interface-server refused XX VNMapp.js:24
XX Cannot find daemon-hosts! XX

Our next attempt was our last: we had proof the app was working.

Our console log:

[creating hosts] VNMapp.js:210
-begin collection calls VNMapp.js:193
--Add Model | ip: 10.0.0.4 VNMapp.js:199
New Host!
IP:10.0.0.4 VNMapp.js:189

Next Steps

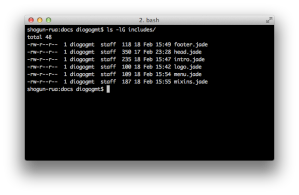

Next is developing a View object for each part of the app’s UI that will be dynamically updated, and splitting the static parts of the page into templates to make duplication and updating easier.

This will be covered in a future post, but so far we are happy to see our work finally coming together!